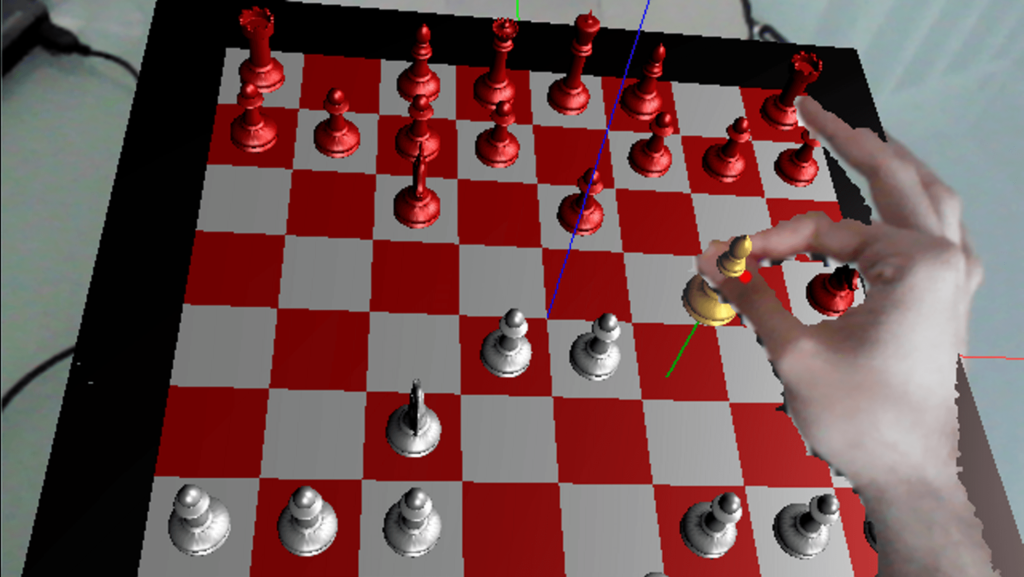

Imagine sitting at your kitchen table and seeing a fully rendered chessboard spring to life in mid-air—complete with pieces you can pick up and move with your bare hands. That’s exactly what I set out to build: an immersive AR chess experience where virtual pieces hover above a real tabletop, and you manipulate them naturally, as if they were physical objects. Using an Intel RealSense depth camera and ArUco markers for spatial tracking, the system blends real and virtual worlds so seamlessly that players forget where the real table ends and the digital board begins.

Under the hood, the application continuously captures RGB-D frames via the RealSense SDK, detects and tracks ArUco markers with OpenCV, and reconstructs a stable tabletop coordinate frame. Pinch-gesture recognition, based solely on thumb and forefinger tracking, lets users “grab” virtual pieces without any gloves or controllers. OpenGL renders the piece models and smooth move animations directly atop the live camera feed. A C++ interface then sends moves to an embedded chess engine, which calculates its response in real time, updating the board and guiding the next interaction.
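
To make the pinch test concrete, here is a minimal sketch of one way it could work, assuming the hand tracker already yields 3D thumb and forefinger tip positions in metres. The names `Point3`, `PinchDetector`, and both thresholds are illustrative assumptions, not the project's actual code. Two thresholds (hysteresis) are used so that jitter around a single cutoff does not toggle the grab on and off.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical fingertip position in camera space, in metres.
struct Point3 {
    float x, y, z;
};

// Euclidean distance between two fingertip positions.
inline float fingertipDistance(const Point3& a, const Point3& b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Pinch state machine with hysteresis: the pinch starts when the tips come
// closer than grabThreshold and ends only once they separate beyond
// releaseThreshold. Both thresholds are assumed tuning values.
class PinchDetector {
public:
    PinchDetector(float grabThreshold, float releaseThreshold)
        : grab_(grabThreshold), release_(releaseThreshold) {
        assert(grab_ < release_);  // the release distance must be the larger one
    }

    // Feed one frame of fingertip positions; returns true while the pinch is held.
    bool update(const Point3& thumbTip, const Point3& indexTip) {
        const float d = fingertipDistance(thumbTip, indexTip);
        if (!pinching_ && d < grab_) {
            pinching_ = true;
        } else if (pinching_ && d > release_) {
            pinching_ = false;
        }
        return pinching_;
    }

    bool pinching() const { return pinching_; }

private:
    float grab_;
    float release_;
    bool pinching_ = false;
};
```

With a 2 cm grab threshold and a 4 cm release threshold, for example, fingertips hovering at 3 cm keep whatever state they were already in, which is the behaviour that helps suppress false grabs from noisy depth data.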

This Mixed Reality Chess system not only showcases advanced superimposition techniques but also demonstrates how natural hand interactions can drive engagement in AR applications. Informal user trials highlighted the joy of reaching out and “grasping” a knight as it glided across the table, and the seamless AI integration kept matches challenging, even for seasoned players. By uniting motion capture, computer vision, graphics, and game AI, the project delivers a fully self-contained AR experience that feels both magical and intuitive.

Key Challenges

- Occluded Marker Handling: As players moved pieces, their hands often hid the ArUco markers. I developed a predictive tracking fallback that estimates the marker position during brief occlusions, so the board alignment stays stable.

- Glove-Free Interaction: Detecting pinch gestures purely from depth and RGB data required tuning hand-segmentation algorithms in OpenCV and filtering noisy RealSense data to avoid false grabs.

- Chess Engine Integration: Embedding a high-performance C++ chess engine into an event-driven AR loop meant carefully managing threading and latency so AI move calculations never interrupted the real-time rendering pipeline.
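
The occlusion fallback can be illustrated with a small sketch. It assumes a simple constant-velocity extrapolation capped at a fixed number of missed frames. That technique is swapped in for illustration and is not necessarily what the project uses. `Vec3`, `MarkerEstimate`, `MarkerTracker`, and `maxMissedFrames` are hypothetical names.

```cpp
#include <cassert>

// Hypothetical marker position in camera space.
struct Vec3 {
    float x, y, z;
};

struct MarkerEstimate {
    bool valid;      // false when no trustworthy position is available
    bool predicted;  // true when the position was extrapolated, not measured
    Vec3 position;
};

// Bridges short occlusions by extrapolating the last measured per-frame motion.
class MarkerTracker {
public:
    explicit MarkerTracker(int maxMissedFrames) : maxMissed_(maxMissedFrames) {
        assert(maxMissedFrames >= 0);
    }

    // Pass the detected position, or nullptr when the marker is occluded this frame.
    MarkerEstimate update(const Vec3* detection) {
        if (detection != nullptr) {
            if (hasLast_ && missed_ == 0) {
                // Two consecutive measurements: refresh the per-frame velocity.
                velocity_ = Vec3{detection->x - last_.x,
                                 detection->y - last_.y,
                                 detection->z - last_.z};
            } else {
                // First sighting, or re-acquired after a gap: velocity is unknown.
                velocity_ = Vec3{0.0f, 0.0f, 0.0f};
            }
            last_ = *detection;
            hasLast_ = true;
            missed_ = 0;
            return MarkerEstimate{true, false, last_};
        }
        if (!hasLast_ || missed_ >= maxMissed_) {
            // Never seen, or occluded for too long: report no estimate.
            return MarkerEstimate{false, false, last_};
        }
        ++missed_;
        last_ = Vec3{last_.x + velocity_.x,
                     last_.y + velocity_.y,
                     last_.z + velocity_.z};
        return MarkerEstimate{true, true, last_};
    }

private:
    int maxMissed_;
    int missed_ = 0;
    bool hasLast_ = false;
    Vec3 last_{0.0f, 0.0f, 0.0f};
    Vec3 velocity_{0.0f, 0.0f, 0.0f};
};
```

Capping the number of predicted frames matters because extrapolation error grows with every missed frame. Once the cap is reached, a renderer could freeze or fade the board rather than let it drift.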
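
For the engine integration, one common way to keep a search from stalling the render loop is to run it on a background thread and poll for the result once per frame. The sketch below assumes the engine can be wrapped as a function that takes a position string and returns a move string. `AsyncEngine`, `SearchFn`, `requestMove`, and `poll` are hypothetical names, not the project's actual interface.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <future>
#include <string>
#include <utility>

// Runs a (hypothetical) engine search off the render thread.
class AsyncEngine {
public:
    // Assumed engine interface: position in, best move out.
    using SearchFn = std::function<std::string(const std::string&)>;

    explicit AsyncEngine(SearchFn search) : search_(std::move(search)) {
        assert(search_);  // a callable engine function is required
    }

    // Starts a search on a background thread.
    // Returns false if a previous result has not been collected yet.
    bool requestMove(const std::string& position) {
        if (pending_.valid()) {
            return false;
        }
        pending_ = std::async(std::launch::async, search_, position);
        return true;
    }

    // Call once per rendered frame; never blocks.
    // Returns true and fills moveOut once the engine has answered.
    bool poll(std::string& moveOut) {
        if (!pending_.valid()) {
            return false;
        }
        if (pending_.wait_for(std::chrono::seconds(0)) != std::future_status::ready) {
            return false;
        }
        moveOut = pending_.get();  // get() also resets pending_ to "no search"
        return true;
    }

private:
    SearchFn search_;
    // Note: destroying a std::async future waits for a running search to finish.
    std::future<std::string> pending_;
};
```

In a render loop, `requestMove` would be called when the player releases a piece, and `poll` would be called every frame while the animation keeps running. The engine's reply is applied only on the frame where `poll` returns true.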